Maintenance Scheduling

Overview

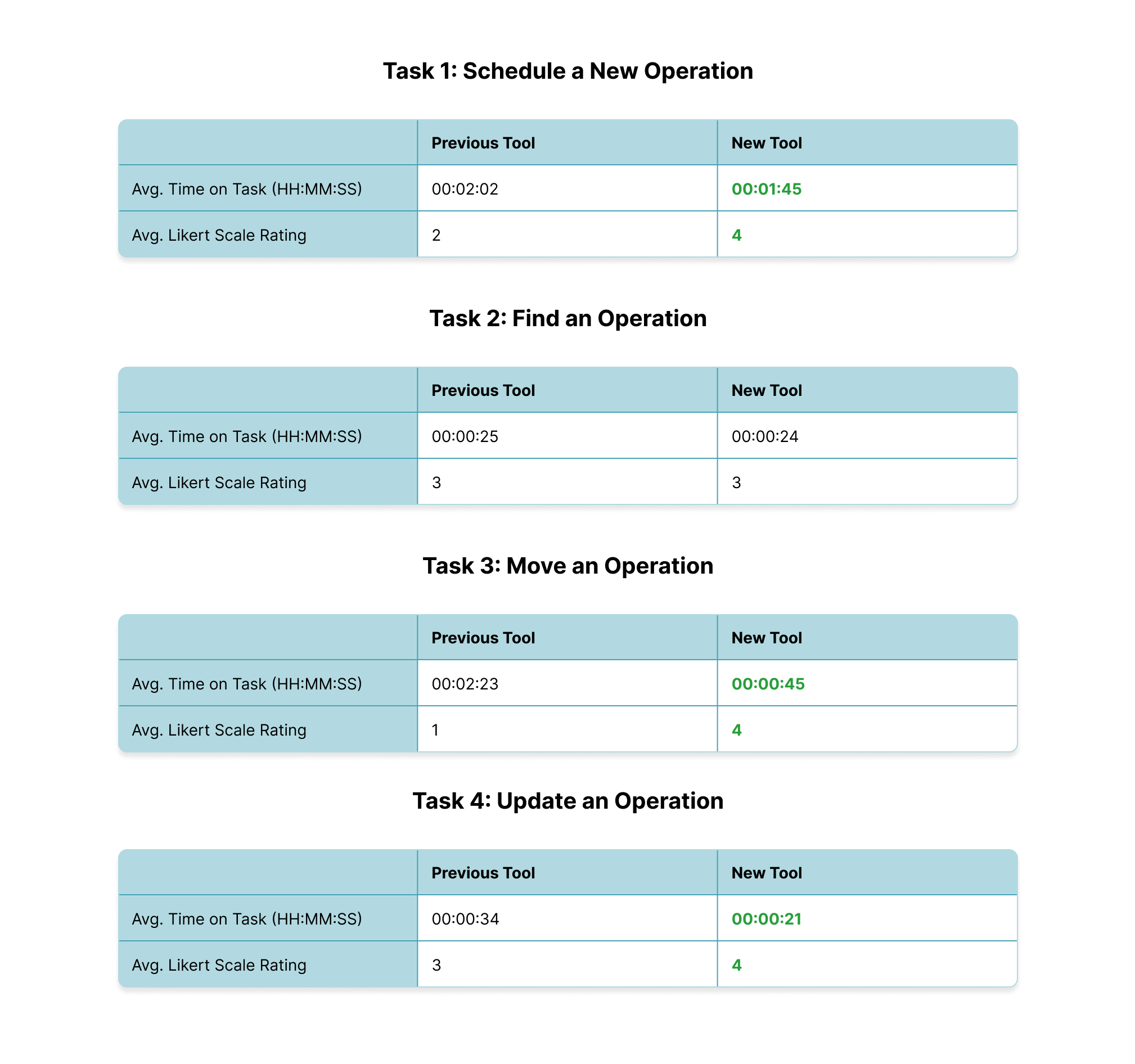

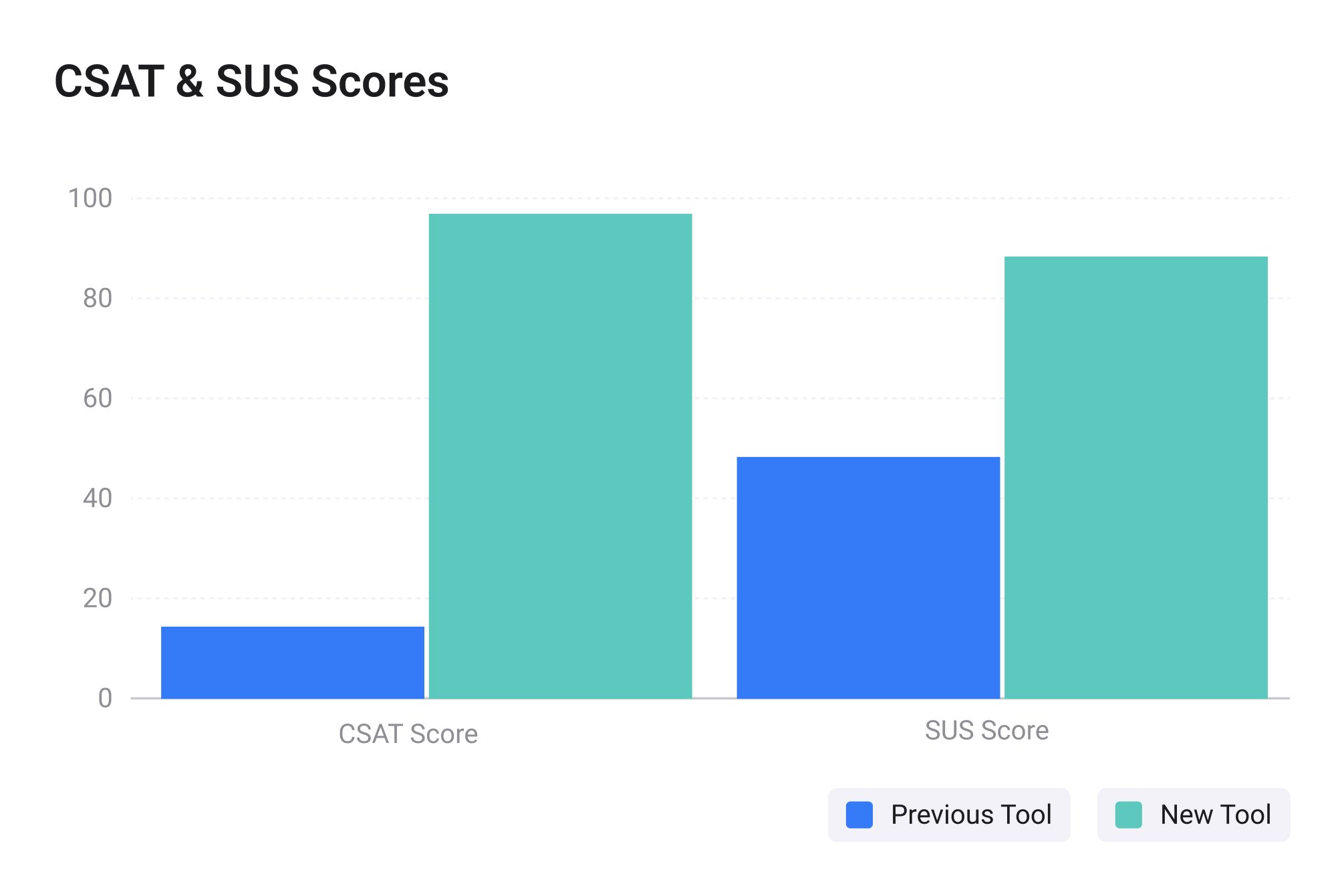

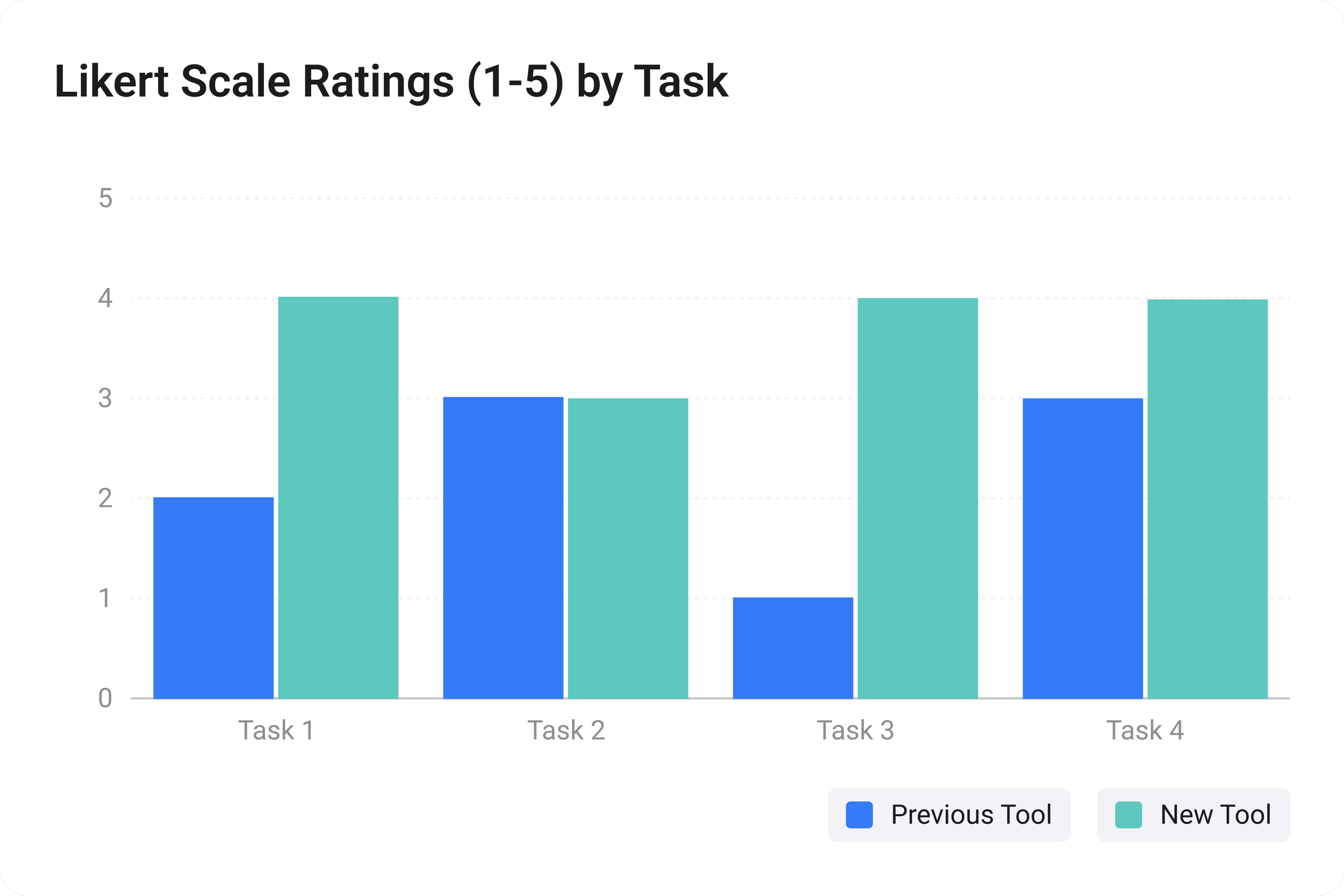

Project

In manufacturing, maintenance is key to keeping operations reliable and profitable. However, scheduling maintenance and repairs is difficult in industries where tasks can take days or months and where large-scale work can be unprecedented. This project covers discovery research on an auto repair company's existing scheduling systems, and benchmarking of new scheduling systems to determine their impact.

Note: Some information may be generalized, changed, or redacted to comply with nondisclosure agreements.